OpenAI has introduced its latest small AI model, GPT-4o mini. The company announced that GPT-4o mini, which is both cheaper and faster than its current advanced models, is now available to developers and, for consumers, through the ChatGPT web and mobile apps. Enterprise users will gain access next week.

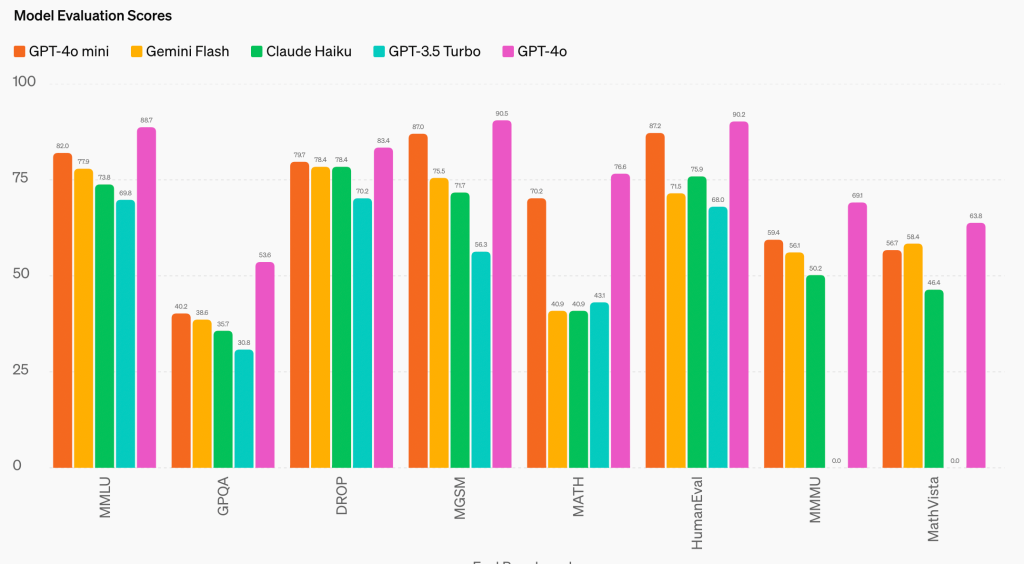

According to OpenAI, GPT-4o mini excels in reasoning tasks involving both text and vision, outperforming other leading small AI models. As small AI models improve, they are becoming increasingly popular among developers because they are faster and cheaper to run than larger models such as GPT-4o or Claude 3.5 Sonnet. These smaller models are well suited to high-volume, simple tasks that require repeated AI calls.

Moreover, OpenAI claims that GPT-4o mini is significantly more affordable to operate than previous models, being more than 60% cheaper than GPT-3.5 Turbo. Currently, GPT-4o mini supports text and vision in the API, with plans to add video and audio capabilities in the future.

“We envision a future where models become seamlessly integrated in every app and on every website,” the company said in its announcement. “GPT-4o mini is paving the way for developers to build and scale powerful AI applications more efficiently and affordably. The future of AI is becoming more accessible, reliable, and embedded in our daily digital experiences, and we’re excited to continue to lead the way.”

For developers using OpenAI’s API, GPT-4o mini is priced at 15 cents per million input tokens and 60 cents per million output tokens. It has a context window of 128,000 tokens, roughly equivalent to the length of a book, and a knowledge cutoff date of October 2023.
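To make the pricing concrete, here is a minimal sketch of a cost estimate based only on the per-token prices quoted above. The function name `estimate_cost_usd` and the sample workload are illustrative assumptions, not part of OpenAI's API, and actual bills may differ from this simple arithmetic.

```python
from decimal import Decimal

# Prices quoted in the article, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = Decimal("0.15")
OUTPUT_USD_PER_MILLION = Decimal("0.60")
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost of one request from its token counts.

    Hypothetical helper for illustration; it is not an OpenAI API call.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = Decimal(input_tokens) * INPUT_USD_PER_MILLION / TOKENS_PER_MILLION
    output_cost = Decimal(output_tokens) * OUTPUT_USD_PER_MILLION / TOKENS_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Assumed workload: 10,000 requests, each with 2,000 input and 500 output tokens.
    per_request = estimate_cost_usd(2_000, 500)
    print(f"per request: ${per_request}")
    print(f"10,000 requests: ${per_request * 10_000}")
```

At these prices, a single request that fills the entire 128,000-token context window with input would cost roughly 1.9 cents before any output tokens are counted.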

While OpenAI did not disclose the exact size of GPT-4o mini, the company said the model is in the same tier as other small AI models such as Llama 3 8B, Claude Haiku, and Gemini 1.5 Flash. However, the company claims GPT-4o mini is faster, more cost-efficient, and smarter than these industry-leading small models, based on pre-launch testing in the LMSYS Chatbot Arena. Early independent tests support these claims.

The company explained, “GPT-4o mini enables a broad range of tasks with its low cost and latency, such as applications that chain or parallelize multiple model calls (e.g., calling multiple APIs), pass a large volume of context to the model (e.g., full code base or conversation history), or interact with customers through fast, real-time text responses (e.g., customer support chatbots).”
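The "parallelize multiple model calls" pattern mentioned in the quote can be sketched roughly as follows. This is an illustration under stated assumptions: `fan_out` is a hypothetical helper, and `fake_model` is a stand-in for a real network call to a model, so the example runs without credentials and does not show OpenAI's client library.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fan_out(
    prompts: List[str],
    call_model: Callable[[str], str],
    max_workers: int = 8,
) -> List[str]:
    """Run call_model on every prompt concurrently.

    Results are returned in the same order as the prompts.
    """
    if not prompts:
        return []
    workers = min(max_workers, len(prompts))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order even when calls finish out of order.
        return list(pool.map(call_model, prompts))


def fake_model(prompt: str) -> str:
    """Placeholder for a real model call (assumption for illustration)."""
    return prompt.upper()


if __name__ == "__main__":
    tickets = ["reset my password", "where is my order", "cancel my plan"]
    for ticket, reply in zip(tickets, fan_out(tickets, fake_model)):
        print(ticket, "->", reply)
```

Threads suit this kind of workload because each call spends most of its time waiting on the network. A low per-call price and low latency are what make issuing many small calls like this practical.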

In a separate announcement, OpenAI introduced new tools for enterprise customers on Thursday. In a blog post, the company revealed the Enterprise Compliance API, designed to help businesses in highly regulated industries such as finance, healthcare, legal services, and government meet logging and audit requirements.

These tools will enable admins to audit and manage their ChatGPT Enterprise data, providing records of time-stamped interactions, including conversations, uploaded files, workspace users, and more.

OpenAI is also offering admins more granular control for workspace GPTs, custom versions of ChatGPT created for specific business use cases. Previously, admins could only fully allow or block GPT actions in their workspace. Now, workspace owners can create an approved list of domains that GPTs can interact with.
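The idea of an approved domain list can be illustrated with a short sketch. OpenAI has not described how its check works, so the matching rule here (an exact host match or any subdomain of an approved domain) and the names `is_allowed` and `approved_domains` are assumptions made purely for illustration.

```python
from typing import Iterable
from urllib.parse import urlsplit


def is_allowed(url: str, approved_domains: Iterable[str]) -> bool:
    """Return True if the URL's host is an approved domain or one of its subdomains.

    Hypothetical policy check; not OpenAI's actual implementation.
    """
    host = urlsplit(url).hostname  # already lowercased; None if the URL has no host
    if host is None:
        return False
    host = host.rstrip(".")
    for domain in approved_domains:
        domain = domain.lower().strip(".")
        # Require a dot boundary so "notexample.com" does not match "example.com".
        if host == domain or host.endswith("." + domain):
            return True
    return False


if __name__ == "__main__":
    approved = {"example.com"}
    print(is_allowed("https://api.example.com/v1/items", approved))
    print(is_allowed("https://example.com.evil.test/", approved))
```

Matching on a dot boundary, rather than on a plain substring or suffix, keeps lookalike hosts from slipping past the list.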