New Service Aims to Help Businesses Create Tailored AI Models

Nvidia has introduced its new AI Foundry service, targeting businesses that want to develop and deploy custom large language models. The service provides the infrastructure and tools companies need to build customized AI models with their own data, running on Nvidia’s DGX Cloud. The initiative reflects Nvidia’s strategy to expand its presence in the growing enterprise AI market.

AI Innovation Times sees this as a strategic move for Nvidia, potentially creating new revenue streams beyond its core GPU business. The company is positioning itself as a comprehensive AI solutions provider, not just a hardware manufacturer.

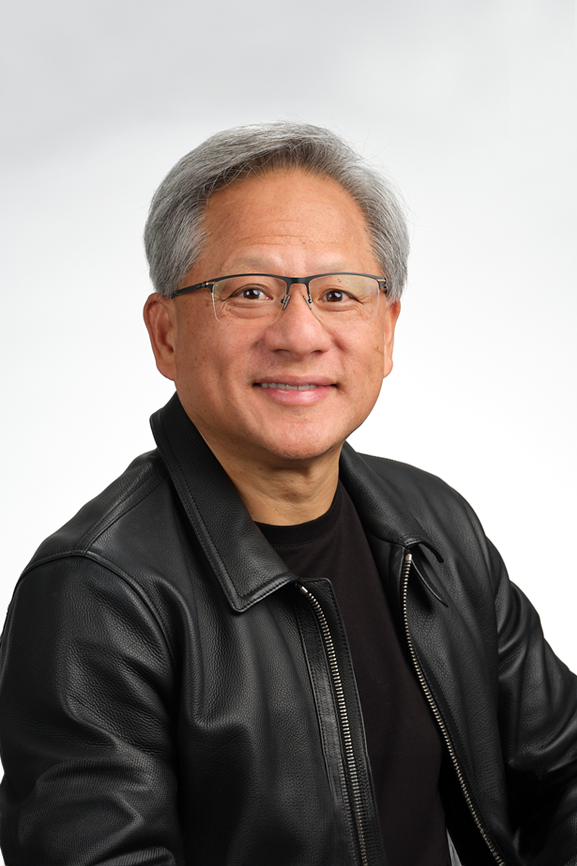

Enterprises need custom models to perform specialized skills trained on the proprietary DNA of their company — their data. Nvidia’s AI Foundry service combines our generative AI model technologies, LLM training expertise and giant-scale AI factory. We built this in Microsoft Azure so enterprises worldwide can connect their custom model with Microsoft’s world-leading cloud services.

Jensen Huang, founder and CEO of NVIDIA

The AI Foundry integrates Nvidia’s hardware, software tools, and expertise, allowing companies to customize popular open-source models like Meta’s Llama 3.1. This service arrives as businesses increasingly seek to utilize generative AI while maintaining control over their data and applications.

Nvidia’s AI Foundry is designed to simplify adapting these models for specific business needs, and the company claims that customization significantly improves model performance. The service offers access to pre-trained models, high-performance computing resources via Nvidia’s DGX Cloud, and the NeMo toolkit for model customization and evaluation. Nvidia’s AI specialists also provide expert guidance.

Nvidia also introduced NIM (Nvidia Inference Microservices), which packages customized models into containerized, API-accessible formats for easy deployment.

“The world’s leading enterprises see how generative AI is transforming every industry and are eager to deploy applications powered by custom models,” said Julie Sweet, chair and CEO of Accenture. “Accenture has been working with NVIDIA NIM inference microservices for our internal AI applications, and now, using NVIDIA AI Foundry, we can help clients quickly create and deploy custom Llama 3.1 models to power transformative AI applications for their own business priorities.”

The announcement coincides with Meta’s release of Llama 3.1 and comes amid increasing concerns about AI safety and governance. By offering a service that allows companies to create and control their own AI models, Nvidia is tapping into a market of enterprises seeking the benefits of advanced AI without the risks associated with public, general-purpose models.

However, the widespread deployment of custom AI models could bring challenges of its own, such as fragmentation of AI capabilities across industries and difficulty maintaining consistent standards for AI safety and ethics.

As competition in the AI sector intensifies, Nvidia’s AI Foundry represents a significant bet on the future of enterprise AI adoption. The success of this initiative will depend on how effectively businesses can leverage these custom models to drive real-world value and innovation in their industries.