As AI-generated content gains traction, startups are raising the bar on their technology. Recently, RunwayML introduced a new, more realistic model for video generation. Now, London-based Haiper, an AI video startup founded by former Google DeepMind researchers Yishu Miao and Ziyu Wang, has launched a new visual foundation model: Haiper 1.5.

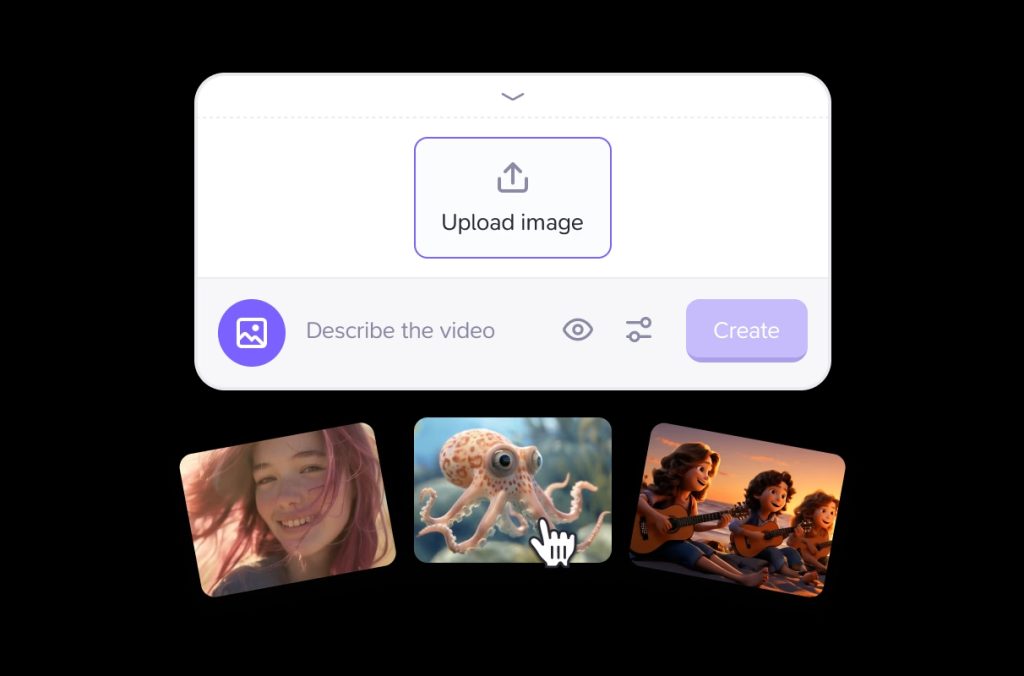

Available on the company’s web and mobile platforms, Haiper 1.5 is an incremental update that lets users generate eight-second clips from text, image, and video prompts. That is double the maximum clip length of Haiper’s initial model. The company also announced a new upscaler to enhance content quality and plans to venture into image generation.

Haiper emerged from stealth just four months ago and has already onboarded more than 1.5 million users. The company is still at a nascent stage and less heavily funded than many other AI startups, so that figure signals strong positioning. Haiper aims to grow its user base with an expanded suite of AI products, challenging competitors like Runway.

Launched in March, Haiper offers a video generation platform powered by an in-house perceptual foundation model. Users enter a text prompt, and the model produces content based on it, with options to adjust elements such as characters, objects, backgrounds, and artistic styles. Initially, Haiper produced two- to four-second clips, but creators wanted longer content. The latest model addresses this by extending clip length to eight seconds.

Originally, Haiper produced high-definition video only for two-second clips, while longer clips were limited to standard definition. The update lets users generate clips of any supported length in either SD or HD. An integrated upscaler enhances any video generation to 1080p with a single click, and it also works with images and videos that users upload.

In addition to the upscaler, Haiper is adding an image model to its platform. Users will be able to generate images from text prompts and then animate them with the company’s video generation tools to refine their final results. Haiper says integrating image generation into the video pipeline will let users test, review, and rework their content before moving on to the animation stage.

While Haiper’s new model and updates are promising, especially given the samples shared by the company, they have yet to be widely tested. At the time of writing, the image model was not yet available on the company’s website, and the eight-second generations and the upscaler were restricted to subscribers on the Pro plan, priced at $24/month, billed yearly.

Currently, the platform’s two-second videos are more consistent in quality than longer ones, which can blur or lack detail, especially in motion-heavy content. However, with these updates and more planned, Haiper expects the quality of its video generations to improve. The company aims to deepen its perceptual foundation model’s understanding of the world, an effort it frames as building toward AGI that could replicate the emotional and physical elements of reality for true-to-life content.