A group of tech professionals – mostly current and former OpenAI employees, with careers that also span other leading AI firms including Google and Anthropic – has voiced concerns in an open letter about the potential dangers of advanced AI. Calling for stronger industry oversight, the signatories highlight both the opportunities and the threats the technology presents.

The letter takes a dual view. “We believe in the potential of AI technology to deliver unprecedented benefits to humanity,” it reads. Yet it also acknowledges significant risks such as “exacerbating current inequalities, manipulation and misinformation, and loss of control of autonomous AI systems that could wipe out humanity.”

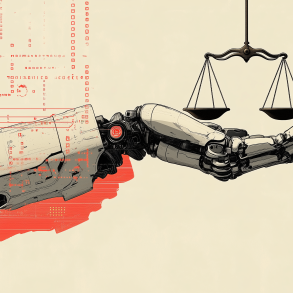

The authors argue that the financial interests of AI companies can deter them from adopting rigorous oversight. They note, “AI companies possess substantial non-public information about the capabilities and limitations of their systems, the adequacy of their protective measures, and the risk levels of different kinds of harm.”

Despite this, these companies face “only weak obligations to share some of this information with governments, and none with civil society,” the letter claims, stressing the importance of whistleblower protections to ensure these organizations are held accountable.

The letter outlines specific actions AI companies should take to foster a culture of safety and accountability:

- Refraining from enforcing agreements that bar criticism of the company over risk-related concerns.

- Providing an anonymous, verifiable channel for employees to report risks to the company’s board, regulators, or an independent expert group.

- Encouraging open criticism and risk discussion among current and former employees without fear of retaliation.

The call for reform is backed by AI pioneers Geoffrey Hinton, Yoshua Bengio, and Stuart Russell, although some other researchers dispute their views. Yann LeCun, for one, has said that similar warnings veer into fearmongering.

The dialogue continues within the companies themselves. OpenAI, recently criticized for its non-disclosure agreements and for disbanding its AI risk team, is reportedly making changes, including removing gag clauses and establishing a Safety and Security Committee.

An OpenAI spokesperson emphasized the importance of dialogue and transparency: “We agree that rigorous debate is crucial given the significance of this technology and we’ll continue to engage with governments, civil society and other communities around the world.”